Augmented Cinema

Sam Hill

24th October 2011

Last night I saw The Matrix Live at the Royal Albert Hall – a showing of the original 1999 motion picture, but with a live orchestra performing the score. It was phenomenal. The NDR Pops Orchestra perfectly captured the epic melodrama of Don Davis’ original soundtrack, with its relentless use of violins and its big brass/timpani crescendos. The venue was perfect for it and the film itself had aged quite well for such a stylised piece of science fiction.

The experience was similar to a treatment of 2001: A Space Odyssey by the Philharmonia Orchestra and Philharmonia Voices, which I caught last year at the Royal Festival Hall and was absolutely bowled over by. It was brilliant and haunting and an unparalleled sensory experience. Loads of other films (Star Wars in Concert for example) have received a similar treatment, and cinematic performances have diversified in many other ways too.

This brings to mind a number of questions about what makes the cinematic experience brilliant, as it is, and when it’s appropriate to toy with the format.

It might be helpful to analyse what the two films have in common to see why they were chosen:

- To start with, 2001 and The Matrix are both excellent, popular movies with incredible scores.

- They have a large replay value.

- They are Oscar-winning classics and have endured long enough to remain relevant.

- They are both unashamedly ostentatious and ambitious works of cinema.

I doubt this style of adaptation would work for films that do not meet these criteria, as good as they still might be. Shrek (2001), for example, is a perfectly good film – funny, innovative and enduring, but it probably lacks the gravitas to warrant a full-blown orchestra. Though new, Tinker Tailor Soldier Spy is, at the time of writing, a critically acclaimed release; but to build a proximate intervention between it and the audience would be a disservice: the movie-goer has not yet seen it as it was meant to be seen, so it shouldn’t be tampered with yet.

A rough logic is beginning to fall into place.

Already Good

Going to the cinema is a fairly unique activity: it can only really be considered a semi-social event, seeing as talking is actively discouraged. Despite this, it’s one of the most popular public leisure activities of the last century. In a way, it’s incredible to think that though we can spend most of our working day looking at screens, and have the opportunity to go home and watch anything we want off more screens from the comfort of a sofa, we consider it a treat to instead occasionally leave the house and view another, bigger screen, at a relatively premium rate. There must be good reasons for this, surely?

Progress in delivering new experiences is important, but if the following assets of cinema are undermined too far then any intervention will be rendered distracting rather than immersive; a diminishment of the cinematic experience, not an augmentation.

What makes cinema great? –

- First off, there is the complete, unavoidable immersion – the film stretches to the edge of the viewer’s peripheral vision and the audio overrides all other noise.

- It’s romantic – the ritual of the popcorn, the trailers, the sense of shared experience and the analytical post-drinks.

- It’s an easy, comfortable and passive activity to take part in, the viewer need only sit, look and listen – sometimes that’s all we want to do.

- Finally, there’s the quality, of both narrative and production. Cinema is arguably the king of story-telling and continues to remain at the very frontier of our qualitative expectations in so many respects.

Future Cinema

(photo credit: Saulius Patumsis via Flickr)

I mentioned cinema performances have diversified in other ways. One group that seem to consistently nail immersive, film-centric nights are Future Cinema. As their site reads:

Future Cinema is a live events company that specialise in creating living, breathing experiences of the cinema…Future Cinema aim to bring the concept of ‘experience’ back to the cinema-going world.

Specialising in bringing events to life through a unique fusion of film, improvised performances, detailed design and interactive multimedia, Future Cinema create wholly immersive worlds that stretch the audience’s imagination and challenge their expectations.

The activities they organised for Blade Runner, One Flew Over The Cuckoo’s Nest, Top Gun and Watchmen have become somewhat legendary in London. Future Cinema are currently the authority on cinematic experience.

What Else?

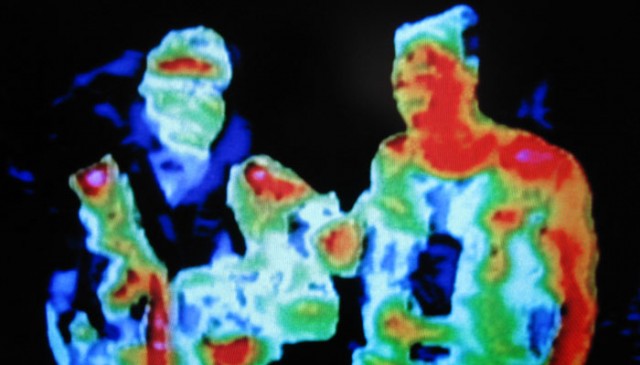

As well as the use of theatre to blur the edges of the screen, there are further tools, both upcoming and established, that are employed to affect our cinema experience. 3D glasses, for example, faced their first serious commercial acid test with Avatar (2009), but now seem to be well established. The super-wide IMAX screenings are arguably even more immersive than conventional cinema, and showings are often very popular. New and unusual locations for temporary cinemas are always cropping up, which provide a change of style from the multiplexes we’re used to. Olfactory stimulation (“smell-o-vision”) is a gimmick occasionally used with films for kids (see Spy Kids 4 in 4-D Aroma-scope (2011)), and in a dozen or so theme parks internationally they go a little further with a show called Pirates 4-D, a slightly cheesy film (starring Eric Idle and the late Leslie Nielsen) with “4-D effects” involving water cannons, bursts of air, vibrating seats and wires which push against the viewers’ feet.

A friend once described how he went to the cinema to see a preview of Danny Boyle’s Sunshine (2007), a film set on a spaceship heading towards the sun. He saw it in the middle of the 2007 summer heatwave, and the cinema’s air conditioning broke down. Sweating as he sat, he didn’t know if he was the victim of a PR stunt or was suffering the onset of a psychosomatic reaction caused by the film. In any case, the experience stayed with him.

Edit (I): London Dungeon have further strained the idea of extra-“dimensional” cinema by introducing a 5D ride – ‘Vengeance’. This includes 3D vision, a number of techniques similar to Pirates 4-D (air blasts, water sprays, vibrations etc.), and laser-sighted pistols which allow the whole audience to play a cooperative, interactive game onscreen.

Edit (II): Another phenomenon that deserves looking at is audience-initiated or cinema-facilitated activity associated with certain cult films. The Room (2003), often cited as the “best, worst film ever made”, serves as a really good example. A ritual has grown around the film – the audience join in with the dialogue, greet the characters as they appear, shout satirical comments and throw plastic spoons at the screen. The effect is that one of the worst films ever produced allows for one of the most energetic and entertaining cinematic experiences possible. In a similar vein, Grease, Rocky Horror and The Sound of Music are often shown in independent cinemas on special sing-along nights, and tend to feature a degree of cosplay.
