Earphones and Selective Reality

Sam Hill

20th November 2011

It’s feasible an average commuting city worker might wear earphones between 5 and 12 hours a day. In some places they’re ubiquitous – on the train, in the office, on the high street – so much as to have become invisible.

This is fine of course – it’s not a criticism, just an observation. Personal experience reveals journeys are less stressful if the sound of a baby crying can be blocked; work is achieved more efficiently without the ambient distractions of an open-plan office.

But the observation does come with a hefty question in tow. It’s equally typical that the aforementioned worker might spend up to 15-16 hours a day looking at screens, but there is a significant difference: screens are not all-encompassing. They can be looked away from, or around, and we can shut our eyes. Conversely, personal headphones are supposed to be all-encompassing; they are supposed to override all ambient noise.

What does it mean then, to block out the world around you: to usurp an important link to one’s environment for so much of the time?

Context

The personal stereo is about 25 years old and has gone through multiple format changes. Significantly, the MP3 player massively opened up the potential for people to carry their “entire” music collections with them. Another (slightly overlooked) innovation has been Spotify for mobile, which allows someone to listen to any song they can call to mind from practically any location through their smartphone, 3G and ‘the cloud’. Even making allowances for licensing and signal strength, that’s an incredible thought, isn’t it? Any song, any place, any time. From prehistory up until 150 years ago, the only way to hear music was to be in the same space as the instrument. There is an incredibly liberating cultural power that comes with the tech we now wield.

Voluntary Schism from Reality

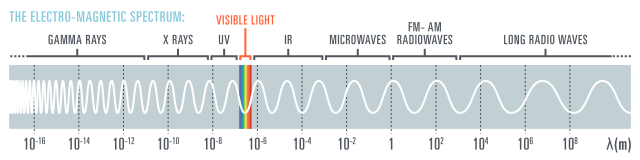

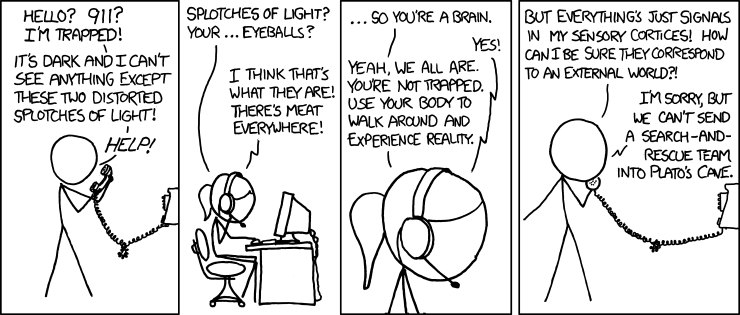

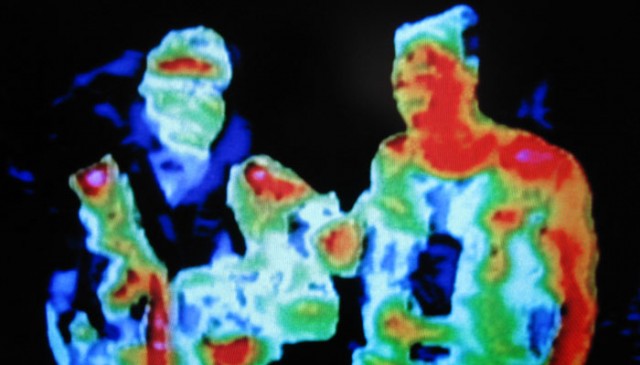

To take a critical sense like hearing and hack its primarily informative/exploratory role to instead supply entertainment will certainly have a significant effect on one’s perception of reality. Granted, ‘reality’ is a weaselly, subjective term, but the choice will still affect an individual’s capacity to perceive their immediate environment. Critically, the user of earphones has made a choice: they are listening to what they want to, regardless of whether it’s what they should listen to. They have been granted the power to exert an amount of control on sensory input, and how they engage with their environment. Whether or not there is an experiential ‘compromise’ going on is contentious.

For example, consider a typical 40-minute train commute. Coincidentally, 40 minutes is the approximate length of an average album. So within a week’s commute (two journeys a day, five days a week) it might be possible to listen to roughly 10 new albums. Doing so would impart a constant, fairly rich supply of fresh experience. On the other hand, listening instead to the daily sounds of a train carriage would probably be emotionally and sensorially lacking, most of the time. However, occasionally the ambient noise of a journey might yield (experiential) gems: eavesdropping on an argument, a phone call or the ramblings of an alcoholic.

Most likely, the album-listening route would be more rewarding in the long term, and so within this context could be considered experientially condonable. But is this true beyond the commute?

Boundaries

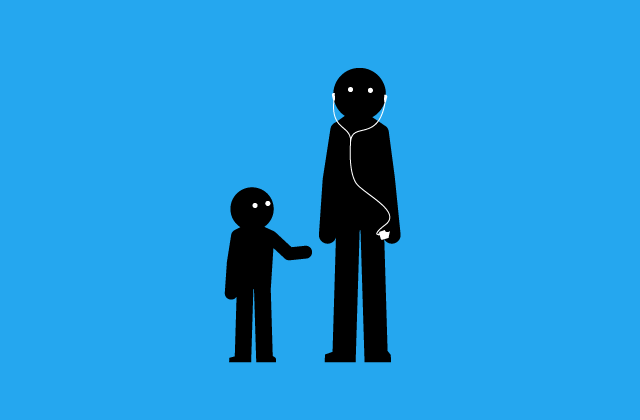

Has society had time to adjust to the power of being able to limit depth of engagement with the physical world? Do we understand the point at which the benefit becomes a hurdle – when a delivery mechanism for experience becomes an obstacle? The thought first occurred to me when I saw a father carrying a toddler in his arms through a park. The father had white earphones hanging from his ears and a vacant expression. The kid was babbling and humming and blowing raspberries at his dad but he was completely oblivious. The sight, an abuse of technological power, made me instantly uncomfortable. The fact this man had wilfully placed a barrier between himself and his son, to the detriment of them both, made me incredibly angry, actually. In this instance it wasn’t strangers on the tube being phased out of attention but immediate family. It seemed wrong by every measure of quality.

I’ve also been amazed to see cyclists weave through traffic whilst listening to music. In my experience it seems necessary to dedicate every possible faculty to cycling in a built-up environment. Granted, there might be marginally more experiential value in cycling to music, but is the pay-off worth the risk of failing to identify peripheral hazards? After all, a premature death will reduce an individual’s net lifetime experience acquired, quite drastically.

By Analogy

In a recent workshop we held at Goldsmiths College, a design student ran a quick experiment to limit their exposure to unpleasant smells. They subverted their olfactory sense by keeping a perfumed cloth over their nose whilst walking through bad-smelling places.

The student realised within a few hours that living with a single abstract ‘pleasant’ smell was less desirable than having access to countless neutral and unpleasant odours – odours which were still relevant and contextually grounded.