Taxonomy of Interaction

Sam Hill

4th October 2012

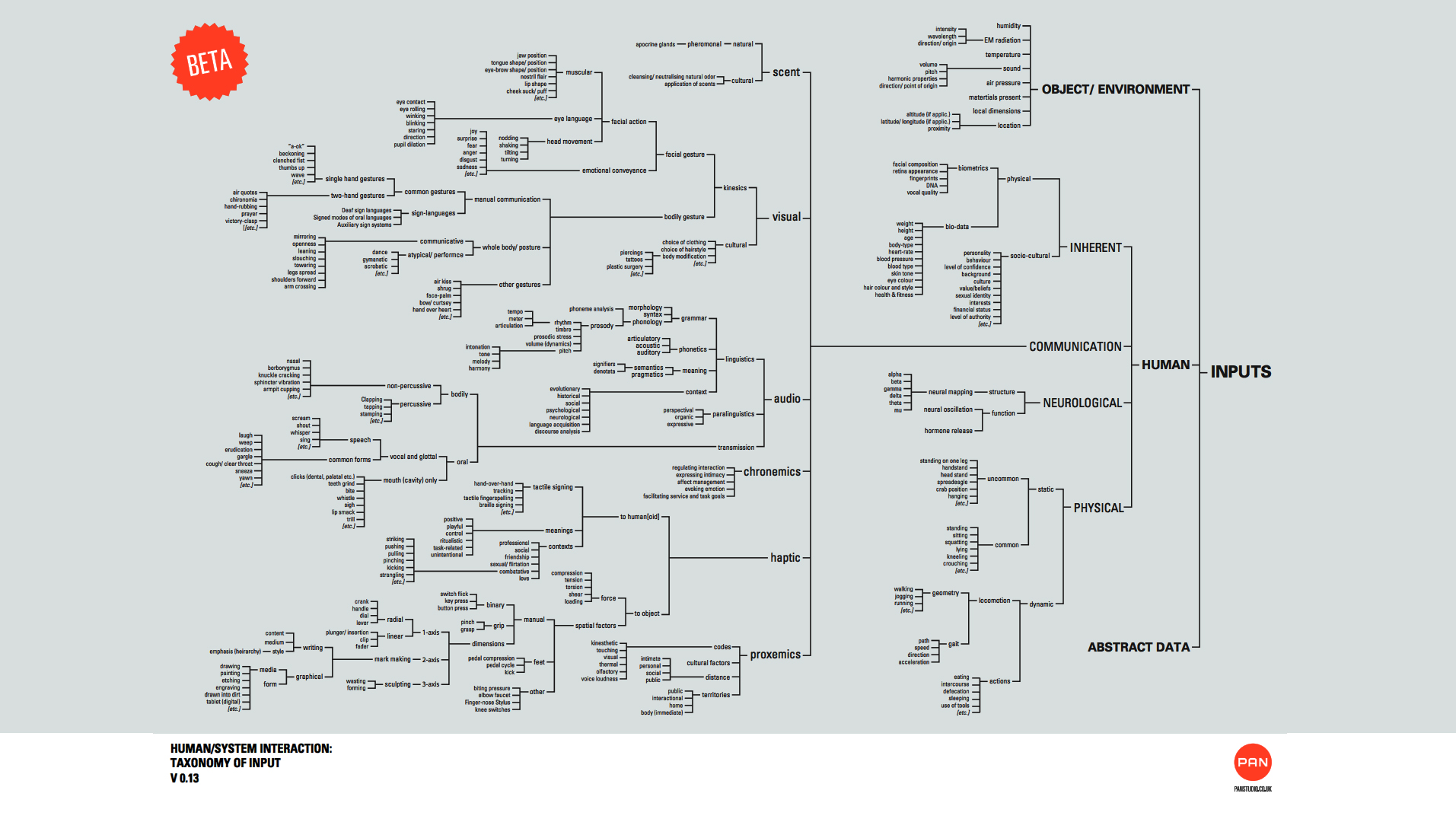

The version shown here focuses almost entirely on human-to-object input. The first public beta, v.0.13, is the current version.

Conceived in a Pub

We had an idea a couple of months ago (one of those “made-sense-in-the-pub, does-it-still-make-sense-in-the-morning?” type of ideas) that it’d be incredibly useful to have some kind of universal classification of interaction. Nothing fancy, just a straight-up, catch-all taxonomy of human-systems inter-relationships.

The idea was a hierarchical table; a neat, organised and absolutely correct information tree, sprouting in two directions from the middle, i.e. the computer, system, or thing. At one end would be “every” (see below) conceivable form of input: any kind of real-world data that a computer could make sense of (actually, or theoretically). These roots would converge into their parent ideas and link up to the centre. Then, in the other direction, would shoot every conceivable output: any kind of effective change that could be made upon another thing, person or environment.
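As a rough illustration of that two-sided tree, here is a minimal Python sketch. The `Node` class and the handful of category names are assumptions made up for this example (loosely borrowed from terms used elsewhere in this post); they are not taken from the actual chart.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """One category in the taxonomy; children are narrower categories."""

    name: str
    children: list[Node] = field(default_factory=list)

    def add(self, name: str) -> Node:
        """Attach a narrower category beneath this one and return it."""
        child = Node(name)
        self.children.append(child)
        return child

    def paths(self, prefix: tuple[str, ...] = ()) -> list[tuple[str, ...]]:
        """Return every root-to-leaf path beneath this node."""
        here = prefix + (self.name,)
        if not self.children:
            return [here]
        found: list[tuple[str, ...]] = []
        for child in self.children:
            found.extend(child.paths(here))
        return found


# The "thing" in the middle owns two trees: one of inputs, one of outputs.
# All branch names below are illustrative placeholders.
inputs = Node("input")
communication = inputs.add("person").add("communication")
communication.add("verbal")
communication.add("haptic")
inputs.add("environment").add("temperature")

outputs = Node("output")  # left empty here; outputs are not yet charted

for path in inputs.paths():
    print(" > ".join(path))
```

Mapping a given object (a door handle, say) would then amount to picking one path from the input tree and one from the output tree.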

If the table were suitably broad, any known or future ‘interaction’ object or installation would, in theory, be mappable through this process, whether it was a door handle, wind-chime, musical pressure-pad staircase or Google’s Project Glass.

We couldn’t find any existing tables of such breadth in the public domain (though correct me if I’m wrong). It was in fact hard enough to find even parts of such a table. So we decided, tentatively, to see how far we could get making our own. Some of the issues that cropped up were surprising, whilst others which loomed ahead in the distance remained obstinately present until we were forced to address them.

Complications and Definitions

There are a couple of important things to be clear on, it seems, when putting together any kind of taxonomy. One of them is choosing an appropriate ordering of common properties. For example, should “physical communication” come under physical activity (alongside walking, eating and other non-communicative movement) or under communication (alongside verbal and haptic communication)? Conventionally, the three elements of an interaction are assumed to be a person, an object, and an environment. Any fundamental interaction typically occurs between any two, e.g. person-person, person-object, object-environment, etc. These elements seemed like an appropriate fundamental trinity, from which everything else could stem.

The difference between an object and an environment, however, seems mostly (though not exclusively) to be a matter of scale, especially when it comes to methods of analysis (temperature, colour, density etc.). We also thought it best to include “abstract data” as a source, essentially to represent any data that might have been created randomly, or where the original relevance had been lost through abstraction.

It can often be desirable to describe something in two different ways. For example, a camera might help recognise which facial muscles are in use (to achieve a nostril flare or raise an eyebrow), but it might also be desirable for an object to recognise these signifiers contextually, as likely indicators of mood (e.g. anger, happiness, fear).

Another issue is granularity. How fine (how deep) should an inspection go? Tunnelling deeper into each vein of enquiry, it becomes difficult to know when to stop, and challenging to maintain consistency.

When we talk about covering “every” kind of input, we mean that it should be possible for an organisation system like this to be all-encompassing without necessarily being exhaustive. A system that broadly refers to “audio input” can encompass the notions of “speech” and “musical instruments”, or incorporate properties like “volume” and “timbre”, without necessarily making distinctions between any of them.

Sarcasm and Toasters

One decision we made early on was to categorise human inputs by their common characteristics, not by the input mechanism that would record them. This was because there might be more than one way of recording the same thing (e.g. movement could be recorded by systems as diverse as cameras, sonar and accelerometers). This created an interesting side-effect, as the taxonomy shifted into a far more complex study of human behaviour and bio-mechanics; “what can people do”. Whilst studying areas of audio, visual and haptic communication, we were especially struck by the sense we were writing the broad specifications for a savvy, sympathetic AI – a successful android/ cylon/ replicant; i.e. something capable of reading the full range of human action.
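To make the distinction concrete, here is a small Python sketch in which the taxonomy's keys are human characteristics and the recording mechanisms hang off them as secondary information. The `RECORDED_BY` table and the `characteristics_for` helper are hypothetical names for this example; the movement entry comes from the paragraph above, while the other two entries are assumptions added for illustration.

```python
# Characteristics are the primary categories; sensors are just annotations.
RECORDED_BY: dict[str, set[str]] = {
    "movement": {"camera", "sonar", "accelerometer"},
    "facial expression": {"camera"},  # assumed entry
    "speech": {"microphone"},  # assumed entry
}


def characteristics_for(sensor: str) -> list[str]:
    """List, alphabetically, the characteristics a given sensor could record."""
    return sorted(
        characteristic
        for characteristic, sensors in RECORDED_BY.items()
        if sensor in sensors
    )


print(characteristics_for("camera"))
print(sorted(RECORDED_BY["movement"]))
```

Organised this way, adding a new kind of sensor changes only the annotations, not the shape of the taxonomy itself.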

Imagine, for example, what it would require for an object to appreciate sarcasm – a toaster, let’s say, that can gauge volume, intonation, emphasis, facial expression, choice of language, timing, socio-cultural circumstances… – estimate a probability of irony and then respond accordingly.

Such capacity would avoid situations like this:

Does it have a Future?

To develop this project is going to require some input from a few experts-in-their-fields; learned specialists (rather than generalists like myself with wiki-link tunnel vision). The taxonomy needs expanding upon (‘outputs’ at the moment are entirely non-existent), re-organising and probably some correcting. If you would like to contribute and you’re a linguist, anthropologist, roboticist, physiologist, psychologist, or see some territory or area you could assist with, your insight would be appreciated.

As our research has indicated, there is often more than one way of classifying interactions. But a hierarchy demands we always use the most applicable interpretation. All taxonomies are artificial constructs, of course, but a hierarchy seems to exacerbate rigid pigeon-holing of ideas. Perhaps the solution is to evolve into something less linear and absolute than a hierarchical taxonomy (like the nested folders of an OS), and instead consider something more nebulous and amorphous (for example the photo-sets and tags in Flickr).
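A tag-based alternative might look something like the following Python sketch. The `TagIndex` class is a hypothetical helper written for this post, not an existing library, and the tagged items are only examples. It shows how “physical communication” could sit under both physical activity and communication at once, which a strict hierarchy would not allow.

```python
from collections import defaultdict


class TagIndex:
    """A flat index in which one item may carry any number of tags."""

    def __init__(self) -> None:
        self._by_tag: defaultdict[str, set[str]] = defaultdict(set)

    def tag(self, item: str, *tags: str) -> None:
        """File an item under each of the given tags."""
        for name in tags:
            self._by_tag[name].add(item)

    def find(self, *tags: str) -> set[str]:
        """Return the items that carry all of the given tags."""
        if not tags:
            return set()
        groups = [self._by_tag.get(name, set()) for name in tags]
        return set.intersection(*groups)


index = TagIndex()
index.tag("walking", "physical activity")
index.tag("verbal communication", "communication")
index.tag("physical communication", "physical activity", "communication")

print(sorted(index.find("communication")))
print(sorted(index.find("physical activity", "communication")))
```

The trade-off is that a flat set of tags loses the parent-child ordering that makes a tree easy to read at a glance.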

It would also be great to have overlays; optional layers of additional information (such as examples of use, methodologies and necessary instruments). Perhaps the solution really needs to be a bit more dynamic, like an interactive app or site. I wonder if the most effective solution might be an industry-powered, interaction-centric wiki. Such a project would be non-trivial, and would require mobilising a fair few effective contributors. The Interaction Design Foundation have a V1.0 Encyclopaedia, and an impressive number of authors. And yet, their chapter-led approach to ownership makes this quite different from the more democratic ‘wiki’ approach.

Credit

Justas did a great job pulling the early research together. A special thanks is also in order for Gemma Carr and Tom Selwyn-Davis who helped research and compile this chart.